Putting it all together

Before we arrive at the last major section of this chapter, which looks at an actual case study and best practices, we felt it would be helpful to put all the generative AI categories together and understand how data flows from one into another and vice versa.

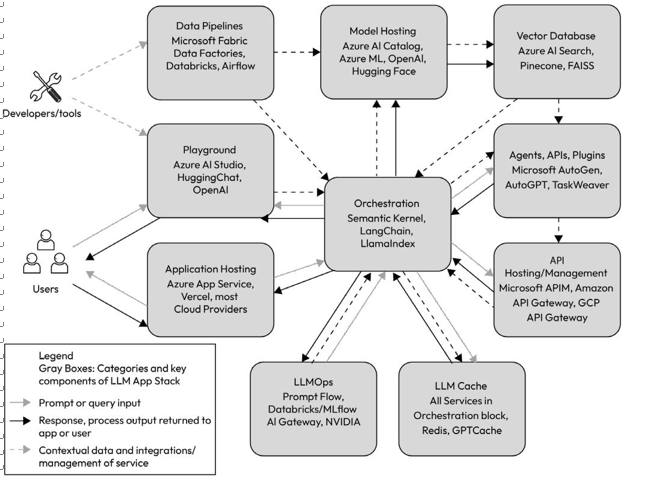

Earlier, we shared the CI/CD pipeline flow using Prompt Flow within the LLMOps construct. Now, we will take a macro look, beyond just the LLM, at how messages in the LLM application development stack flow across the generative AI ecosystem, using categories to organize the products and services.

While we do not endorse any specific services or technology, other than those of our employer, our goal here is to show how a typical LLM flow would appear using various generative AI toolsets, products, and services. We have organized each of the workloads by category, represented by the light gray boxes, along with a few example products or services within each category. We then use arrows to show how traffic typically flows: from the queries submitted by users to the output returned to them, and from the contextual data provided by developers to the conditioned LLM outputs. The contextual data may include fine-tuning, RAG, and other techniques that you have learned in this book, such as single-shot and few-shot prompting:

Figure 6.12 – LLM end-to-end flow with services
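To make the flow in Figure 6.12 a little more concrete, the following is a minimal, hypothetical sketch of the path a single query takes: contextual data is retrieved (standing in for RAG), combined with few-shot examples to condition the prompt, and sent to a model whose output is returned to the user. All names here (`KnowledgeBase`, `build_prompt`, `fake_llm`, `answer`) are illustrative assumptions, not part of any product shown in the figure. The keyword-overlap retriever replaces a real vector search, and `fake_llm` is an offline stand-in for a hosted model call, so treat this as a sketch of the data flow rather than a production implementation.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeBase:
    # Hypothetical in-memory document store standing in for a vector database.
    documents: list[str] = field(default_factory=list)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        # Naive keyword-overlap scoring used in place of embedding similarity search.
        terms = set(query.lower().split())
        ranked = sorted(
            self.documents,
            key=lambda doc: len(terms & set(doc.lower().split())),
            reverse=True,
        )
        return ranked[:k]


# Hypothetical few-shot examples supplied by developers to condition the output.
FEW_SHOT_EXAMPLES = [
    ("What is LLMOps?",
     "LLMOps covers the people, processes, and platforms for operating LLM applications."),
]


def build_prompt(query: str, context: list[str]) -> str:
    # Conditioning step: grounding context (RAG-style) plus few-shot examples.
    lines = ["Answer using only the context below.", "Context:"]
    lines += [f"- {doc}" for doc in context]
    for question, reply in FEW_SHOT_EXAMPLES:
        lines += [f"Q: {question}", f"A: {reply}"]
    lines += [f"Q: {query}", "A:"]
    return "\n".join(lines)


def fake_llm(prompt: str) -> str:
    # Offline stand-in for a hosted model call (for example, an Azure OpenAI
    # deployment). It just returns the first context line it finds.
    for line in prompt.splitlines():
        if line.startswith("- "):
            return line[2:]
    return "I don't know."


def answer(query: str, kb: KnowledgeBase) -> str:
    context = kb.retrieve(query)           # 1. retrieve contextual data
    prompt = build_prompt(query, context)  # 2. condition the prompt
    return fake_llm(prompt)                # 3. call the model and return its output


if __name__ == "__main__":
    kb = KnowledgeBase([
        "Prompt Flow can be used to build CI/CD pipelines for LLM apps.",
        "Semantic Kernel is an orchestration SDK from Microsoft.",
    ])
    print(answer("What is Semantic Kernel?", kb))
```

In a real deployment, each of the three numbered steps in `answer` would map to one of the categories in the figure (a vector store, an orchestration layer such as Semantic Kernel or Prompt Flow, and a hosted model), which is why keeping them as separate functions makes it easier to swap one service for another.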

LLMOps – case study and best practices

Our case study involves a Fortune 50 company based in the US, in the professional services industry, that had already been working with AI tools and using both Azure OpenAI and Azure ML in the cloud for almost a year. This organization was expanding its successful generative AI pilot worldwide and needed a repeatable way to develop, test, and deploy LLMs for its internal employees. Below are the steps we took, shared so that others know what to expect when applying an LLMOps strategy to an existing generative AI ecosystem within an organization:

LLMOps field case study

- Executive vision and LLMOps strategy: For any organization to use LLMOps, generative AI, or AI successfully, leadership buy-in and support are essential so that business groups and teams can then build out a repeatable framework. The organization had already gone through the journey of manually deploying models, so next, we helped the CIO and his direct staff create a solid LLMOps strategy using the guidelines described earlier in this chapter. We helped review the company’s most beneficial generative AI projects and provided suggestions on automating most of their processes using LLMOps to improve business performance.

- Demos, demos, and more demos: To help shape the vision and drive ideation, we ran a number of demos: generative AI demos, including hands-on time with several LLMs, for those newer to the technology, and LLMOps demos using Prompt Flow for their ML data scientists and software developers.

- Training: To help the client's teams fully grasp the concepts behind generative AI tools and improve their knowledge and skills, we recommended both generative AI and Azure OpenAI training for those newer to the subject. This helped ensure that the customer’s internal teams were skilled and informed about the technologies they would be using, operationalizing, and managing. We also delivered custom LLMOps training for the developer teams, along with training on Microsoft Semantic Kernel (SK), as both LLMOps and SK were very new to the organization. The teams were eager to use an orchestration platform to be more agile in their generative AI approach while reducing the cumbersome management of the large technical stack they had already deployed. Together, Semantic Kernel and LLMOps allowed for a more refined generative AI deployment methodology.

- Hands-on hackathon: To build comfort with the tools and technologies, we set up a hands-on “hackathon” in which we took a few existing business challenges, where the current processes were either not working or non-existent, and addressed them in a large group setting over multiple days.

- LLMOps pilots: Next, we assisted two different teams, responsible for the organization's development and operational support, in piloting the LLMOps strategy and processes. We used the lessons learned, observed behaviors, and feedback from these pilots to refine the process. Recall that LLMOps is not only about people and technology or platforms; it is also about processes. To implement LLMOps successfully, we needed the various teams within the organization to define and adopt these newly agreed-upon processes. Fortunately, this organization already had well-established DevOps and MLOps processes in place, so adopting an LLMOps strategy and applying its processes was not a drastic disruption to the business.

In summary, this Fortune 50 organization has benefited from the streamlined processes that LLMOps offers, from the initial design and development stage during the hackathon to the evaluation and refinement stage during the pilots.