Platform – using Prompt Flow for LLMOps

Microsoft’s Azure Prompt Flow helps your organization integrate LLMOps practices, streamlining the operationalization of LLM applications and copilot development. It gives customers secure access to private data with robust controls, and it supports prompt engineering, continuous integration and continuous deployment (CI/CD), and iterative experimentation. It also supports versioning, reproducibility, and deployment, and it includes a layer for safe and responsible AI. In this section, we will cover how Azure Prompt Flow can help you implement LLMOps processes:

Figure 6.10 – LLMOps Azure AI Prompt Flow diagram

Let’s walk through the preceding figure, Figure 6.10, to describe the Prompt Flow stages:

- In the top section, the Design and Development stage is where machine learning professionals and application developers create and develop prompts. In this stage, you work with LLMs by testing different prompts and using advanced logic and control flow to build effective prompts. With Prompt Flow, developers can create executable flows that connect LLMs, prompts, and Python tools through a clear, visualized graph.

- In the middle, the Evaluation and Refinement stage is where you assess prompts for factors such as usefulness, fairness, groundedness, and content safety. Here, you also establish and measure prompt quality and effectiveness using standardized metrics. Prompt Flow lets you build prompt variants and compare their results through large-scale testing, using prebuilt and custom evaluations.

- At the bottom of the image, the final Optimization and Production stage is where you track and optimize your prompts for security and performance and collaborate with others to gather feedback. Prompt Flow helps by deploying your flow as an endpoint for real-time inference, testing that endpoint with sample data, monitoring telemetry for latency, and continuously tracking performance against key evaluation metrics.
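To make the Design and Development and the Evaluation and Refinement stages more concrete, here is a minimal, self-contained Python sketch of the underlying idea. It is not the Prompt Flow SDK: a flow is modeled as a small graph of nodes (a prompt template, an LLM call, and a Python post-processing tool) executed in dependency order, and two prompt variants are compared on a labeled dataset with an exact-match metric. The `stub_llm` function, the variant templates, and the three-row dataset are hypothetical stand-ins; in Prompt Flow itself, you would connect a real LLM and use built-in or custom evaluations instead.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Tuple

# A flow is a graph: node name -> (function, names of upstream nodes/inputs).
Flow = Dict[str, Tuple[Callable[..., str], List[str]]]


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: a keyword rule, so the sketch runs offline."""
    text = prompt.lower()
    label = "positive" if ("great" in text or "love" in text) else "negative"
    # Mimic a model that only answers tersely when the prompt asks it to.
    if "one word" in text:
        return label.capitalize()
    return f"I think the sentiment is {label}."


def build_flow(template: str) -> Flow:
    """Wire a prompt node, an LLM node, and a Python tool node into one flow."""
    return {
        "prompt": (lambda review: template.format(review=review), ["review"]),
        "llm": (stub_llm, ["prompt"]),
        "postprocess": (lambda answer: answer.strip().lower(), ["llm"]),
    }


def run_flow(flow: Flow, review: str, output: str = "postprocess") -> str:
    """Execute nodes in dependency (topological) order and return one node's output."""
    values: Dict[str, str] = {"review": review}  # flow input
    graph = {name: deps for name, (_, deps) in flow.items()}
    for name in TopologicalSorter(graph).static_order():
        if name in flow:  # skip "review", which is an input rather than a node
            func, deps = flow[name]
            values[name] = func(*(values[d] for d in deps))
    return values[output]


# Two hypothetical prompt variants to compare.
VARIANTS = {
    "variant_0": "Classify the sentiment of this review. Reply with one word.\nReview: {review}",
    "variant_1": "What do you think about this review?\nReview: {review}",
}

# Tiny made-up evaluation set, for illustration only.
DATASET = [
    {"review": "I love this laptop", "label": "positive"},
    {"review": "The battery died after a day", "label": "negative"},
    {"review": "Great screen and keyboard", "label": "positive"},
]


def exact_match_accuracy(template: str) -> float:
    """Evaluate one variant: fraction of rows where the flow output equals the label."""
    flow = build_flow(template)
    hits = sum(run_flow(flow, row["review"]) == row["label"] for row in DATASET)
    return hits / len(DATASET)


if __name__ == "__main__":
    scores = {name: exact_match_accuracy(t) for name, t in VARIANTS.items()}
    for name, score in scores.items():
        print(f"{name}: accuracy={score:.2f}")
    print("best variant:", max(scores, key=scores.get))
```

Running the script reports an accuracy of 1.00 for `variant_0` and 0.00 for `variant_1`, because the stub model returns a bare label only when the prompt asks for one word. Executing nodes in topological order mirrors how a flow graph resolves its dependencies; a real evaluation would swap `stub_llm` for a model call and exact match for richer metrics such as groundedness.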

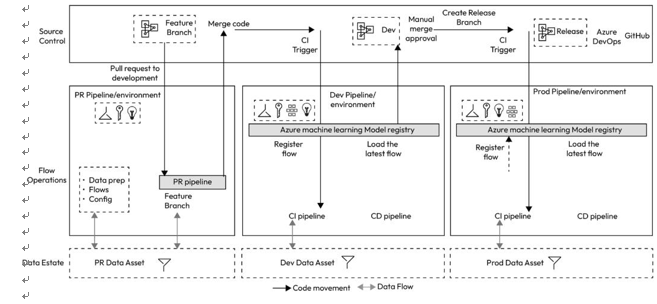

While the preceding image is a simplified view of how to approach and understand Prompt Flow, let’s now trace the steps of a Prompt Flow deployment within an organization. The following infographic, taken from the Microsoft public website LLMOps with Prompt Flow and GitHub (reference link at the end of this chapter), illustrates the Prompt Flow deployment activities.

Prompt Flow deployment involves quite a few steps, and we will not go into too much detail here. To explore further, see the main Microsoft website for additional documentation and the GitHub site, which has a compelling hands-on exercise that you can follow along with.

Figure 6.11 – A summary of the Prompt Flow CI/CD deployment sequence

As the level of detail in the preceding image suggests, Prompt Flow empowers you and your organization to confidently develop, rigorously test, fine-tune, and deploy flows through CI/CD, enabling reliable and advanced generative AI solutions aligned with LLMOps.

The preceding image shows three main environments: PR, Dev, and Prod. A PR (pull request) environment is a short-lived environment containing changes that require review before they are merged into the Dev and/or Prod environments; it is often called a test environment. For more detail on setting up PR and other environments, see “Review pull requests in pre-production environments.”

LLMOps Prompt Flow deployment involves several steps:

- In the initialization stage, the data and environment are prepared in a staging/test environment; this covers data preparation and the entire environment setup.

- As with any developer tools that help author CI/CD pipelines, you then open a pull request from the feature branch to the development branch, which executes the experimentation flow, as shown in the preceding image.

- Once the changes are approved, the generative AI code is merged from the Dev branch into the main branch, and the same process repeats for the Dev and Prod environments, shown in the middle and right of the preceding image.

- The Azure Machine Learning model registry supports all of this CI/CD processing. It makes it easy to track and organize models, from generative AI models to traditional ML models, and it also connects to other model registries and repositories, such as Hugging Face.
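The gating logic behind this PR, Dev, and Prod sequence can be sketched in a few lines of Python. This is a hypothetical, simplified model, not the accelerator template’s pipeline code or the Azure Machine Learning registry API: each environment is assumed to have a minimum evaluation score, a flow advances to the next environment only if it meets that threshold, and each successful promotion is recorded in an in-memory dictionary standing in for a model registry. The `THRESHOLDS` values, the `Registry` class, and the `promote` function are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical minimum evaluation scores for each environment, in promotion order.
STAGES = ["pr", "dev", "prod"]
THRESHOLDS = {"pr": 0.70, "dev": 0.80, "prod": 0.85}


@dataclass
class Registry:
    """In-memory stand-in for a model/flow registry (not the Azure ML registry API)."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def register(self, name: str, env: str) -> str:
        """Record a new version of `name` for `env` and return its version label."""
        history = self.versions.setdefault(name, [])
        history.append(env)
        return f"{name}:{len(history)}"


def promote(flow_name: str, scores: Dict[str, float], registry: Registry) -> List[str]:
    """Walk PR -> Dev -> Prod, stopping at the first environment whose gate fails.

    `scores` maps each environment to the evaluation score measured there;
    a missing score counts as 0.0. Returns the environments the flow passed.
    """
    passed: List[str] = []
    for env in STAGES:
        score = scores.get(env, 0.0)
        if score < THRESHOLDS[env]:
            print(f"{env}: {score:.2f} < {THRESHOLDS[env]:.2f}, promotion stopped")
            break
        version = registry.register(flow_name, env)
        print(f"{env}: {score:.2f} passed, registered {version}")
        passed.append(env)
    return passed


if __name__ == "__main__":
    registry = Registry()
    promote("support-flow", {"pr": 0.91, "dev": 0.88, "prod": 0.83}, registry)
```

In this example run, the flow clears the PR and Dev gates and is registered twice, but it stops before Prod because 0.83 falls below the assumed 0.85 threshold. Stopping at the first failed gate reflects the idea that changes must be approved in one environment before they move on to the next.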

You can manage all of the LLMOps CI/CD steps using Azure DevOps or GitHub. Many of the steps and details are best understood through practice, so building this process flow with the Prompt Flow hands-on lab in our GitHub repo will give you the experience and understanding you may need. Also check out this accelerator for deploying your Prompt Flow CI/CD pipelines: https://github.com/microsoft/llmops-promptflow-template.

Important note

While we have discussed various LLMOps practices, we have not delved into the integration of autonomous agents due to the novelty of this field and the limited number of agent-based applications currently in production. Many such applications are still in the research phase. However, we anticipate that autonomous agents will soon become a significant aspect of LLMOps practices.