Benefits of LLMOps

- Automation and Efficiency: Automation significantly reduces duplication of effort when introducing a new use case into production. The workflow, encompassing data ingestion, preparation, fine-tuning, deployment, and monitoring, is triggered automatically. This streamlining makes integrating each additional use case much more efficient.

- Agility: LLMOps accelerates model and pipeline development, enhances the quality of models, and speeds up deployment to production, fostering a more agile environment for data teams.

- Reproducibility: LLMOps facilitates the reproducibility of LLM pipelines, ensuring seamless collaboration across data teams, minimizing conflicts with DevOps and IT, and enhancing release velocity.

- Risk mitigation: LLMOps enhances transparency and responsiveness to regulatory scrutiny, ensuring greater compliance with policies and thereby mitigating risks.

- Scalability management: LLMOps enables extensive scalability and management capabilities, allowing teams to oversee, control, manage, and monitor thousands of models for continuous integration, delivery, and deployment.
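To make the automation benefit more concrete, the workflow named in the first bullet (data ingestion, preparation, fine-tuning, deployment, and monitoring) can be sketched as a reusable pipeline that runs unchanged for every new use case. This is only an illustrative sketch: the `Pipeline` class, the `STAGES` list, and the placeholder stage functions are hypothetical names chosen for this example and do not correspond to any particular LLMOps product.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical stage names, taken from the workflow described in the list above.
STAGES = ["ingest", "prepare", "fine_tune", "deploy", "monitor"]


@dataclass
class Pipeline:
    """Ordered set of stage functions shared by every use case."""

    steps: Dict[str, Callable[[dict], dict]] = field(default_factory=dict)

    def register(self, name: str, fn: Callable[[dict], dict]) -> None:
        if name not in STAGES:
            raise ValueError(f"unknown stage: {name}")
        self.steps[name] = fn

    def run(self, use_case: str) -> dict:
        # Every stage receives the running context and returns an updated one.
        context = {"use_case": use_case, "completed": []}
        for name in STAGES:
            context = self.steps[name](context)
            context["completed"].append(name)
        return context


def make_stage(name: str) -> Callable[[dict], dict]:
    def stage(context: dict) -> dict:
        # Placeholder: a real stage would call data, training, or serving tooling.
        return {**context, name: "ok"}

    return stage


def build_default_pipeline() -> Pipeline:
    pipeline = Pipeline()
    for name in STAGES:
        pipeline.register(name, make_stage(name))
    return pipeline


if __name__ == "__main__":
    pipeline = build_default_pipeline()
    # The same pipeline definition is reused for each new use case.
    for use_case in ("support-chatbot", "contract-summarizer"):
        result = pipeline.run(use_case)
        print(use_case, "->", result["completed"])
```

Because the stages are registered once and reused, onboarding another use case amounts to another call to `run`, which is the reduction in duplicated effort that the list describes.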

Comparing MLOps and LLMOps

MLOps is to machine learning what LLMOps is to LLMs, and the two share many similarities along with some differences. Some of our readers may already be familiar with machine learning and MLOps. With LLMOps, we typically do not have to go through expensive model training, as the LLMs are already pretrained. However, in our LLMOps process, as described in the “discover and tune” section, we still have the discovery process (to determine which LLM, or LLMs, would fit our use case), the tuning of the prompts using prompt engineering or prompt tuning, and, if necessary, the fine-tuning of our models for domain-specific grounding.
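The ordering described above (discover candidate models, tune prompts, and fine-tune only if necessary) can be sketched as a small selection routine. This is a minimal sketch under stated assumptions: the function `discover_and_tune`, the `evaluate` callback, the model and prompt names, and the toy scores are all hypothetical and stand in for whatever evaluation method a team actually uses.

```python
from typing import Callable, Iterable


def discover_and_tune(
    models: Iterable[str],
    prompts: Iterable[str],
    evaluate: Callable[[str, str], float],
    target_score: float,
) -> dict:
    """Pick the best (model, prompt) pair and flag fine-tuning only if needed."""
    prompts = list(prompts)
    best = {"model": None, "prompt": None, "score": float("-inf")}
    for model in models:          # discovery: which pretrained LLM fits the use case
        for prompt in prompts:    # prompt tuning: which prompt variant works best
            score = evaluate(model, prompt)
            if score > best["score"]:
                best = {"model": model, "prompt": prompt, "score": score}
    # Fine-tuning is considered only when prompting alone falls short of the target.
    best["needs_fine_tuning"] = best["score"] < target_score
    return best


if __name__ == "__main__":
    # Toy scores for illustration only; real scores would come from an evaluation set.
    toy_scores = {
        ("model-a", "plain"): 0.50,
        ("model-a", "with-examples"): 0.70,
        ("model-b", "plain"): 0.60,
        ("model-b", "with-examples"): 0.65,
    }
    choice = discover_and_tune(
        models=["model-a", "model-b"],
        prompts=["plain", "with-examples"],
        evaluate=lambda m, p: toy_scores[(m, p)],
        target_score=0.80,
    )
    print(choice)
```

Note that no training happens in the loop itself: the pretrained models are only evaluated, and the more expensive fine-tuning step is deferred until the cheaper options have been tried.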

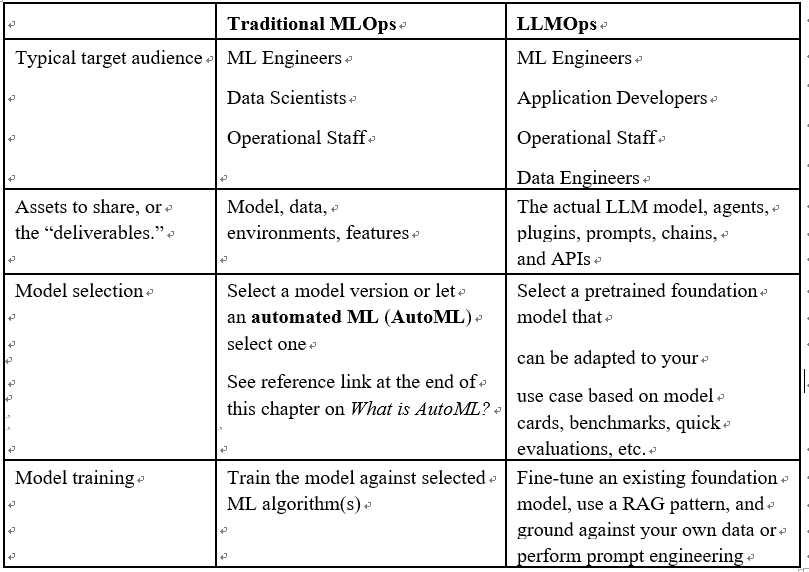

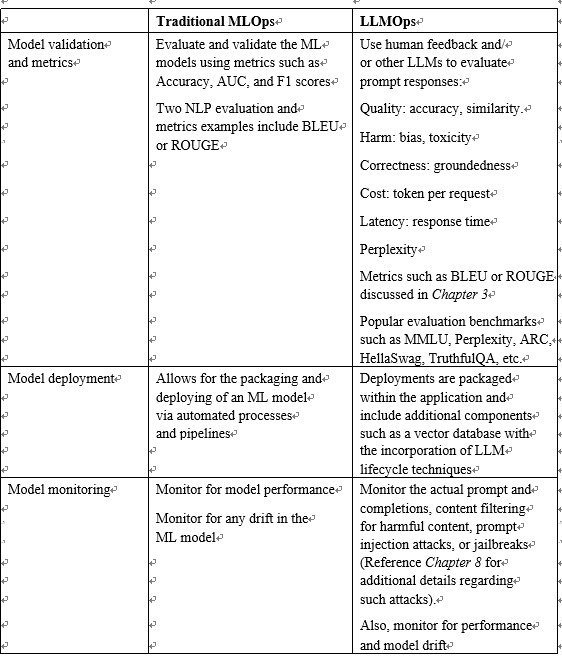

Later in this chapter, we will look at a real-life use case where LLMOps played a critical role in a large organization’s management of LLMs; however, for now, it may be beneficial to do a side-by-side comparison of the two in a chart (Figure 6.9) to understand how the two relate and where they differ:

Figure 6.9 – Comparing MLOps and LLMOps

This summary should clarify which components of MLOps and LLMOps are similar and where they differ.

You should now have a foundational knowledge of LLMOps and its core component, the LLM lifecycle. As mentioned earlier, while these processes and procedures may seem a bit tedious, the benefits reaped are repeatable, safe generative AI practices within your organization. Teams can achieve faster model and pipeline deployments while providing higher-quality generative AI applications and services.

For that “tedious” part, there are services that can streamline the LLMOps process. One such service is known as Azure Prompt Flow.